“There’s no way to get there without a breakthrough,” OpenAI CEO Sam Altman said, arguing that AI will soon need even more energy.

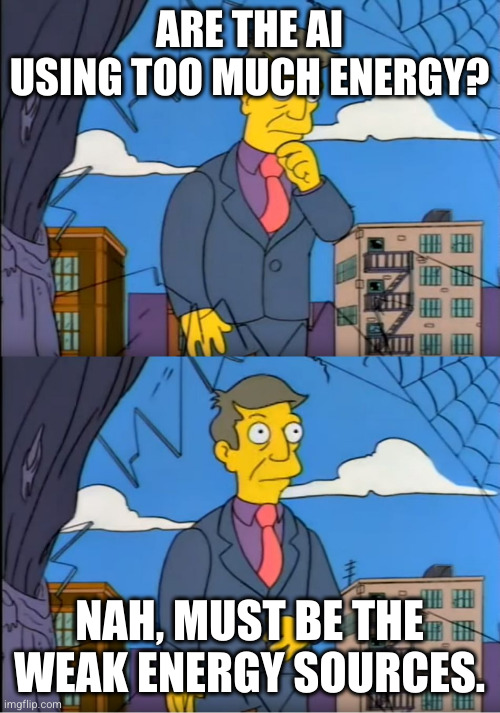

Optimizing power consumption? Why?!

In fairness, the computing world has seen unfathomable efficiency gains, which are being pushed further by the sudden adoption of ARM. We are doing our damnedest to make computers faster and more efficient, and we’re doing a really good job of it, but energy production hasn’t seen nearly those gains in the same amount of time. The sudden, widespread adoption of AI, a very power-hungry tool (because it’s basically emulating a brain in a computer), has caused a spike in the energy needed by computers that are already getting more efficient as fast as we can make them. Meanwhile, energy production isn’t keeping up at the same rate of innovation.

The problem there is the paradox of efficiency (the Jevons paradox): making something more efficient ends up increasing its use, not reducing it, because the extra use stimulated by the greater efficiency outweighs the reduced input per use.
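A toy way to see the paradox is a constant-elasticity demand model. This is a standard textbook simplification, not something from the comment; the function name, parameters, and numbers below are illustrative assumptions. If demand for what the energy buys is elastic enough (elasticity above 1), doubling efficiency raises total energy use.

```python
def energy_use(efficiency: float, elasticity: float,
               k: float = 1.0, energy_price: float = 1.0) -> float:
    """Total energy consumed under a toy constant-elasticity demand model.

    Each unit of service (computation, light, travel...) needs
    1 / efficiency units of energy, so it costs energy_price / efficiency.
    Demand for the service scales as cost ** -elasticity.
    """
    cost_per_service = energy_price / efficiency
    service_demand = k * cost_per_service ** (-elasticity)
    return service_demand / efficiency


for elasticity in (0.5, 1.5):
    before = energy_use(efficiency=1.0, elasticity=elasticity)
    after = energy_use(efficiency=2.0, elasticity=elasticity)
    # Energy scales as efficiency ** (elasticity - 1) in this model.
    print(f"elasticity {elasticity}: energy x{after / before:.2f} after doubling efficiency")
```

In this model energy use scales as efficiency raised to (elasticity − 1): below an elasticity of 1 efficiency gains cut total use, above it they increase it. What the real elasticity is for AI compute is not something this sketch can tell you.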

It’s not so much the hardware as it is the software and utilisation, and by software I don’t necessarily mean any specific algorithm, because I know they give much thought to optimisation strategies when it comes to implementation and design of machine learning architectures. What I mean by software is the full stack considered as a whole, and by utilisation I mean the way services advertise and make use of ill-suited architectures.

The full stack consists of general purpose computing devices with an unreasonable number of layers of abstraction between the hardware and the languages used in implementations of machine learning. A lot of this stuff is written in Python! While algorithmic complexity is naturally a major factor, how it is compiled and executed matters a lot, too.

Once AI implementations stabilise, the theoretically most energy-efficient way to run them would be on custom hardware made to run only that code, with that code written at the lowest possible level of abstraction. The closer we get to the metal (or the closer the metal gets to our program), the more efficient we can make it go. I don’t think we take bespoke hardware seriously enough; we’re stuck in this mindset of everything being general-purpose.

As for utilisation: LLMs are not fit for, or even capable of, dealing with logical problems or anything involving reasoning based on knowledge; they can’t even reliably regurgitate knowledge. Yet, as far as I can tell, this constitutes a significant portion of their current use.

If the usage of LLMs were reserved for solving linguistic problems, then we wouldn’t be wasting so much energy generating text and expecting it to contain wisdom. A language model should serve as a surface layer – an interface – on top of bespoke tools, including other domain-specific types of models. I know we’re seeing this idea being iterated on, but I don’t see this being pushed nearly enough.[1]

When it comes to image generation models, I think it’s wrong to focus on generating derivative art/remixes of existing works instead of on tools to help artists express themselves. All these image generation sites we have now consume so much power just so that artistically wanting people can generate 20 versions (give or take an order of magnitude) of the same generic thing. I would like to see AI technology made specifically for integration into professional workflows and tools, enabling creative people to enhance and iterate on their work through specific instructions.[2] The AI we have now are made for people who can’t tell (or don’t care about) the difference between remixing and creating and just want to tell the computer to make something nice so they can use it to sell their products.

The end result in all these cases is that fewer people can live off of being creative and/or knowledgeable while energy consumption spikes as computers generate shitty substitutes. After all, capitalism is all about efficient allocation of resources. Just so happens that quality (of life; art; anything) is inefficient and exploiting the planet is cheap.

[1] For example, why does OpenAI gate external tool integration behind a payment plan while offering simple text generation for free? That just encourages people to rely on text generation for all kinds of tasks it’s not suitable for. Other examples include companies offering AI “assistants” or even AI “teachers”(!), all of which are incapable of even remembering the topic being discussed 2 minutes into a conversation.

[2] I get incredibly frustrated when I try to use image generation tools because I go into it with a vision, but since the models are incapable of creating anything new based on actual concepts, I only ever end up with something incredibly artistically compromised and derivative. I can generate hundreds of images based on various contortions of the same prompt, reference image, masking, etc. and still not get what I want. THAT is inefficient use of resources, and it’s all because the tools are just not made to help me do art.

It’s emulating a ridiculously simplified brain. Real brains have orders of magnitude more neurons, but beyond that they already have completely asynchronous evaluation of those neurons, as well as much more complicated connecting structure, as well as multiple methods of communicating with other neurons, some of which are incredibly subtle and hard to detect.

To really take AI to the next level I think you’d need a completely bespoke processor that can replicate those attributes in hardware, but it would be a very expensive gamble because you’d have no idea if it would work until you built it.

This dude Al is the new Florida Man, wonder if it’s the same Al from Married with Children

Unity developers be like.

Some of the smartest people on the planet are working to make this profitable. It’s fucking hard.

You are dense and haven’t taken even a look at simple shit like Hugging Face. Power consumption is about the biggest topic you find with anyone in the know.

Some of the smartest people on the planet are working to make this profitable. It’s fucking hard.

[Take a look at] Hugging Face. Power consumption is about the biggest topic you find with anyone in the know.

^ fair comment

The human brain uses about 20W. Maybe AI needs to be more efficient instead?

Perfect let’s use human brains as CPUs then. Not the whole brain just the unused bits.

I’ve seen that film

It’s what The Matrix would’ve been if the studios didn’t think people would be too dumb to get it, so we ended up with the nonsense about batteries.

They also thought we wouldn’t understand how Switch could be a woman in the matrix but a man in the real world. So they just made the character a butch woman because apparently that’s easier somehow. So many little changes like this were made.

Holy fuck, now her name makes so much more sense. God dammit, why are we so fucking stuck up as a society that we couldn’t even keep that?

Tbf that society was a while ago now.

I don’t think it’s gotten better, and honestly they oversimplify even more today. For some reason

Flubber!

We use all of our brain. Well, some of us try to anyway.

I would love it (if there were a FOSS variant of that). Imagine being able to run an LLM, or even a LAM, in your head,

wait…

🤔

FOSS Neuralink

That would require a revolutionary discovery in material science and hardware.

And yet we have brains. This brute force approach to machine learning is quite effective but has problems scaling. So, new energy sources or new thinking?

We just run the AI for a gazillion epochs and then it’s ~~overfitted~~ evolved intelligence. Thanks Darwin, we did it again.

It’d be way easier to just grow brains instead

We invented computers to do things human brains either couldn’t do, or couldn’t do fast enough.

If only we could convert empty hype into energy.

Well we can, we had a “jumpstyle” wave going on in the Netherlands a couple of years ago. No clue if it ever got off the ground anywhere else seeing as it was a techno thing or something.

It’s like crypto but sliiiighly better

It’s so, so, so much better. GenAI is actually useful, crypto is gambling pretending to be a solution in search of a problem.

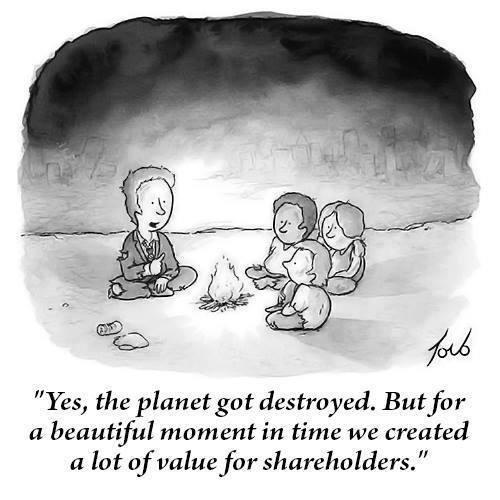

So AI can’t exist without stealing people’s content and it can’t exist without using too much energy. Why does it exist then?

Because the shareholders need more growth. They might create Ultron along the way, but think of the profits, man!

There’s no way these chatbots are capable of evolving into Ultron. That’s like saying a toaster is capable of nuclear fusion.

That’s if you set the toaster to anything above 3


It’s the further research being done on top of the breakthrough tech enabling the chatbot applications that people are worried about. It’s basically big tech’s mission now to build Ultron, and they aren’t slowing down.

What research? These bots aren’t that complicated beyond an optimisation algorithm. Regardless of the tasks you give it, it can’t evolve beyond what it is.

I think we’ve got a bit before we have to worry about another major jump in AI and way longer for an Ultron. The ones we have now are effectively parsers for google or other existing data. I personally still don’t see how we feel like we can get away with calling that AI.

Any AI that actually creates something ‘new’ that I’ve seen still requires a tremendous amount of oversight, tweaking and guidance to produce useful results. To me, they still feel like very fancy search engines.

So AI can’t exist without stealing people’s content

Using the word “steal” in a way that implies misconduct here is “You wouldn’t download a car” level reasoning. It’s not stealing to use the work of some other artist to inform your own work. If you copy it precisely then it’s plagiarism or infringement, but if you take the style of another artist and learn to use it yourself, that’s…exactly how art has advanced over the course of human history. “Great artists steal,” said Picasso famously.

Training your model on pirated copies, that’s shady. But training your model on purchased or freely available content that’s out there for anyone else to learn from? That’s…just how learning works.

Obviously there are differences, in that generative AI is not actually doing structured “thinking” about the creation of a work. That is, of course, the job of the human writing and tweaking the prompts. But training an AI to be able to write like someone else or paint like someone else isn’t theft unless the AI is, without HEAVY manipulation, spitting out copies that infringe on the intellectual property of the original author/artist/musician.

Generative AI, in its current form, is nothing more than a tool. And you can use any tool nefariously, but that doesn’t mean the tool is inherently nefarious. You can use Microsoft Word to copy Eat, Pray, Love but Elizabeth Gilbert shouldn’t sue Microsoft, she should sue you.

Edit: fixed a typo

The models have gotten more efficient and smaller very fast if you look just a year back. I bet we’ll run some small LLMs locally on our phones (I don’t really believe in the other form factors yet) sooner than we think. I’d say before 2030.

I can already locally host a pretty decent AI chatbot on my old M1 MacBook (Llama 2 7B) which writes at the same speed I can read; it’s probably already possible on top-of-the-line phones.

Lol, “old M1 laptop” 3 to 4 years is not old, damn!

(I have a macbookpro5,3 (mid 2009) running Arch, lol)

But nice to hear that the M1 (and thus theoretically even the iPad, if you are not talking about the M1 Pro / M1 Max) can already run Llama 2 7B.

Have you tried Mistral AI yet? It should be a bit more powerful and a bit more efficient, iirc. And it is Apache 2.0 licensed.

But nice to hear that the M1 (and thus theoretically even the iPad, if you are not talking about the M1 Pro / M1 Max) can already run Llama 2 7B.

An iPhone XR/XS can run Stable Diffusion, believe it or not.

3 to 4 years is not old

Huh, nice. I got the MacBook Air secondhand so I thought it was older. Thanks for the suggestion, I’ll try Mistral AI next, perhaps on my phone as a test.

Because it’s a miracle technology. Both of those things are also engineering problems, ones that have been massively mitigated already. You can run models almost as good as GPT-3.5 on a phone, and individuals are pushing the limits on how efficiently we can train every week.

It’s not just making a chatbot or a new tool for art; it’s also protein folding, coming up with unexpected materials, and being another pair of eyes that will assist a person in doing anything.

They literally promise the fountain of youth, autonomous robots, better materials, better batteries, better everything. It’s a path for our species to break our limits, and become more.

The downside is we don’t know how to handle it. We’re making a mess of it, but it’s not like we could stop… The AI alignment problem is dwarfed by the corporation alignment problem

🙄 iTS nOt stEAliNg, iTS coPYiNg

By your definition everything is stealing content. Nearly everything in human history is derivative of others’ work.

How about an efficiency breakthrough instead? Our brains just need a meal and can recognize a face without looking at billions of others first.

I mean, we can only do that because our system was trained over hundreds of thousands, even millions, of years to recognise others of the same species

Almost all of our training was done without requiring burning fossil fuels. So maybe ole Sammy can put the brakes on his shit until it’s as fuel efficient as a human brain.

Food production and transport is famously a zero emission industry.

We’ve been around for hundreds of thousands of years as Homo sapiens. Food production and transport emissions were practically 0% until the last 100 years. So, yes, that’s right.

While that is true, a lot of death and suffering was required for us to reach this point as a species. Machines don’t need the wars and natural selection required to achieve the same feats, and don’t have our same limitations.

They are nowhere NEAR achieving the same feats as humans.

But the training has already been done, no?

Erm.

I recall a study about kids under a specific age that cannot get scared of looking at pictures of demons and other horror stuff because they don’t know yet what your everyday default person looks like.

So I’d argue that even people need to get accustomed to a thing before they could recognise or have an opinion about anything.

We still need to look at quite a few. And the other billions have been pre-programmed by a couple of billion years of evolution.

“recognize a face”

Whose? Can the human brain just know what someone looks like without prior experience?

Your ability to do anything is based on decades of “data sets” that you’re being constantly fed, it’s no different than an AI they just get it all at once and we have to learn by individual experience.

Neuralink plus AI in 10 years is basically the Matrix then.

Great, right from coin miners to the “AI” fad. Tons of carbon shot into the sky and for what? A more unequal society on both counts.

Sure, we destroyed the planet, but we did it so we could produce valuable artwork like this:

Yeah, I’d say it was worth it. It also gave us this:

🎶🎵 Middle-age mutant ninja turtles,

Middle-age mutant ninja turtles,

Middle-age mutant ninja turtles,

Heroes on the Advil!

Turtle Back pain! 🎵 🎶

I can’t decide if I want this to have been written by an AI or not.

I’m not an AI, thought of it a few days ago, and this seemed like a good opportunity to deploy it

Worth every flood and fire

At least AI has the potential to do something useful, unlike coin mining. Although it’s not doing much currently, so I’m not too wild about it. Maybe real AI could actually find new energy sources.

General artificial intelligence has the potential to be actually useful. Generative AI, like Chat GPT (Generative Pre-Trained Transformer) absolutely does not. It’s a glorified autocomplete.

Chat GPT (Generative Pre-Trained Transformer) absolutely does not.

I had to help my wife with an Excel VBA script. I know a few programming languages but I don’t know VBA. Hours of googling turned up no usable scripts that did what I needed. ChatGPT wrote a working VBA script in seconds.

Anyone who thinks it is a fancy auto complete hasn’t used it.

It is a fancy auto complete, just an incredibly fancy auto complete. In the same way that a computer that can run VR games, simulate the evolution of solar systems, and let you access something close to the sum total of human knowledge over the internet is a very fancy pocket calculator.

I think it’s better explained as a search engine that works at the word level of granularity. It lets you do a word-level search of all written human knowledge, which allows it to adapt to your specific prompt. It’s the next step in searching knowledge bases. First we had libraries, then document search, now it’s word search. I think it’ll be impactful on the same level as the creation of libraries and search engines.

I tried using it for Python and it made up a library that doesn’t exist to handle the complex task I was asking it to do. Utterly useless. The way it works is so complicated that it simply has you fooled into thinking it’s more than a fancy auto complete, but it is literally exactly that. Just the “smartest” auto complete there is. Don’t worry about it; at least you didn’t fall in love with it and leave your wife to be with ChatGPT, right?

It’s a tool to be used like any other. If you are relying on it to produce working code with no effort on your part, then yes, it is likely useless. If you are using it to get you most of the way there, it’s very useful. For example, I’m most comfortable writing Python but have been doing a lot of C# recently, and asking a code-focused LLM questions like “how do you rename a column of a C# DataTable” is so much quicker and more useful than trying to search through blogspam, it’s not even funny.

As someone who works in IT and has seen the actual real-world merit of LLMs, I can tell you guys clearly don’t work in a white-collar field, or you’d realize that you sound like someone in the ’90s claiming the internet is a fad.

Microsoft continues to integrate it, and on prem data and cloud engineers are hooking it up to company resources for everything from helping with data creep to handling low level repetitive tasks.

In the next ten years all bottom-level data entry will be performed entirely by on-premise AI models and the position of secretary will be a thing of the past. It’s not a gimmick like NFTs or crypto; it’s an actual tool that we are finding more uses for every day.

VR and Crypto were bullshit, but AI is the real deal. On a side note I find it hilarious that out of the three options, Zuckerberg bet billions on the two wrong options. Unfortunately the rich have so much power that they can make catastrophic mistakes and still have plenty of money to finally bet on the right one.

How is VR bullshit? No Man’s Sky, Elite Dangerous, Half-Life: Alyx, more games every day are using VR.

Unless you mean the “metaverse” Decentraland kind of deal, then yeah, for sure.

It being the future of computer interfaces is bullshit. I enjoy it as a novelty but Zuckerberg bet on it as the “next big thing”, on par with the Internet.

They never stopped to ask if it was actually an improvement and just assumed it’s what everyone wanted.

Too bad some things are just more convenient on a screen in front of you. I don’t want to walk around a virtual grocery store, the website is fine.

It’s a little useful. It’s another level of meta. Initially, research meant libraries and books/docs, but you could get synopses from atlases, encyclopedias, and dictionaries. The internet allowed Gopher searching, and then web browsers allowed search engines, but the results initially had no synopses, so you had to check each link. Then synopses let you look through the links and check only the most promising ones. Generative AI lets the most promising links be identified without perusal. One of the most useful things AI assistants do is give you the main links they got their info from.

And document search is just a glorified library 🙄

Don’t know why you are being downvoted. This is exactly it. If ChatGPT can find you a solution, then that solution is already out there and it just stole it from some human. That is fundamentally how ChatGPT works, the damn thing can’t think.

How, exactly, do you think learning in humans works? We don’t just spontaneously know things; we build on others’ work and iterate improvements through our learning and discovery. You didn’t just know English, you learned it from someone else who learned it, ad infinitum, until you get back to the earliest stages of the complex chemicals we evolved from.

When considered objectively and rationally, intellectual property is among the most insane imaginary bullshit humanity ever came up with and actually adopted as a practice, but here we are. Thoughts and prayers.

I heard that the human body can produce more bioelectricity than a battery

Which is bullshit for obvious reasons. Humans are no eels.

In fact, the original script of The Matrix had the machines harvest humans to be used as ultra-efficient compute nodes. Executive meddling led to the dumb battery idea.

I love their original idea. Having your brainpower sapped and also being part of a collective dream that creates the world around you is such a cooler and more philosophical idea.

Yeah, my headcanon is that the humans just have no fucking idea what the machines are doing with them, and that there was only one movie

This was a far smarter premise. I wonder if it would have been as popular had they kept it.

Tek wars had that premise and just look at how popular that was.

Not really. It’s just a different form of energy. A calorie is a unit of heat, and heat is energy; electricity is energy as well. Thus you can compare them directly. It’s how you compare things like the electrical production efficiency of a thermal-cycle generation process.
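To put food energy and electrical power in the same units, here is a minimal sketch converting a daily intake to average watts. It assumes 1 kcal = 4184 J (the thermochemical kilocalorie) and an illustrative intake of 2000 kcal/day; the 20 W brain figure is the one quoted earlier in the thread, and the function name is hypothetical.

```python
JOULES_PER_KCAL = 4184.0          # thermochemical kilocalorie
SECONDS_PER_DAY = 24 * 60 * 60    # 86,400 s


def kcal_per_day_to_watts(kcal_per_day: float) -> float:
    """Average power in watts equivalent to a daily energy intake in kcal."""
    return kcal_per_day * JOULES_PER_KCAL / SECONDS_PER_DAY


body_watts = kcal_per_day_to_watts(2000)  # about 96.9 W for the whole body
brain_share = 20 / body_watts             # a ~20 W brain is roughly a fifth of that
print(f"{body_watts:.1f} W, brain share {brain_share:.0%}")
```

So a person running on a 2000 kcal diet averages somewhere around 100 W, which is why “humans as batteries” never added up.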

Knock knock, Neo.

Didn’t CERN open a portal to hell recently, can’t we just steal their power? What are they using it for what could go wrong?

Didn’t CERN open a portal to hell recently, can’t we just steal their power?

That’s too similar to the plot of Doom and we all know what happened there.

At least in Doom they had sense enough to do it on Mars

Argent Energy is extremely clean but it isn’t ethical, COWARD LIBRALS will complain that it’s made from the eternal tortured souls of the dead

Big Geothermal will try to silence you on this one.

Do we even need a portal to hell, aren’t we living in one?!

Yeah but our hell is out of power. Who cares about that other hell

It’s more of a purgatory, or maybe fantasy has no part in dealing with reality

We could just install some heat pumps in hell and transport the energy via flux pipeline to the overworld.

Reminds me of the Castle Animated series on YouTube.

How many kilowatt hours are in hell I wonder?

All

So is AI the new Blockchain?

Unlike the Blockchain it has an actual use tho.

I bought pizza with bitcoin, haven’t bought anything with AI yet.

I get daily use out of LLMs but haven’t done anything with Bitcoin.

I want to use Bitcoin to buy cannabis and Soylent and have it arrive at my door via drone. Give me my better future

Give an LLM to a high school student and they could do something with it.

Try to explain blockchain to an adult and watch them pretend to understand.

Always was.

Or we could stop this ridiculous llm “ai” trend and move towards sustainable living like our hyper-waste society

These comments often indicate a lack of understanding about ai.

ML algorithms have been in use for nearly 50 years. They have certainly become much more common since about 2012, particularly with the development of CUDA. It’s not just some new trend or buzzword.

Rather, what we are starting to see are the fruits of our labour. There are so many really hard problems that just cannot be solved with deductive reasoning.

It’s simultaneously possible to realize that something is useful while also recognizing the damage that its trend is causing from a sustainability standpoint, and that neither realization particularly demonstrates a lack of understanding about AI.

Humans very rarely take sustainability into account when money can be made.

The lack of knowledge comes from thinking the damage is outpacing its usefulness. It simply isn’t.

Highly debatable

All it costs is power, one of the easiest things to make sustainable until we can make a computer that runs on beans.

AI is already too useful to give up, it’s not “ridiculous”

Why is it ridiculous?


Exactly. This is why the AI hype train is overblown. Stop shoving “AI” everywhere when they know it’ll cost a lot in electricity.

The real path forwards with AI will be specialized super advanced models costing hundreds per run (business use case) and/or locally run AI using NPUs, especially the latter.

We must disassemble the solar system and make ~~paperclips~~ AI server farms

So much for hoping AI was going to deliver energy breakthroughs.

Got to spend energy breakthroughs to make energy breakthroughs?

I love when people invent something then complain about how dangerous it is. It really hits you in the feels.

In the end, as always, it will only benefit the companies. And all the people get is put out of a job because they have been replaced by some piece of software no one even understands anymore.

Wasn’t the same thing said about ATMs? And then it created the need for banks to hire more employees?

iirc, technology/robots has only been able to create more jobs, right? or am I misinformed?

The difference is the type of the job. Do we want to make jobs available for the general population and requiring minimal training, or do we want to make jobs available only for those with very difficult-to-get engineering degrees?

This is a silly take, people have benefitted hugely from all the big tech developments in the past and will do from ai also - just as you have a mobile phone that can save and improve your life in a myriad of ways so you’ll have access to various forms of ai which will do similar. GPS is a good example, functionally free and making navigation far safer, faster, and better.

Here’s a genuine already happened use case for ai benefitting you, an open source developer was able to add a whole load of useful features to their free software by using AI to help code - I know because it was me, among many many others.

I know people making open source AI tools too and they’re all using AI coding assistants, mostly the free ones. I’ve seen a lot of academic researchers using AI tools, generally built with open source tools like PyTorch and with help from AI coding tools. Even if you don’t use AI yourself you’re already benefitting from it; even if you don’t use open source software, the services you rely on do.

Imagine being able to implement the most advanced and newest methodologies in your design process or get answers to complex and niche questions about new technology instantly. You buy a printer for example and say to your computer ‘I’ve plugged in a printer make it work’ and it says ‘ok, there isn’t a driver available that’ll work with your pc but I’ve written one based on the spec in the datasheet, do you want me to print a test page?’

Imagine being able to say ‘talk me through diagnosing a fault on my washing machine’ and it guides you through locating and fixing the fault, possibly by designing a replacement part and giving you fabrication options.

Or being able to say ‘this website is annoying, change it so that I only see the video window’ or ‘make a playlist in release order of all ABBA songs that charted’ or ‘check currently available archives to see if there’s a mirror of this deleted post’ or ‘check all the sites and see if anyone posted a sub version of the next episode of this anime’ or ‘Keep an eye on this Lemmy community and add any popular memes involving fish to my feed, but don’t bother with any meta stuff or aquatic mammals’ or ‘this advert says I can make free money, is it legit?’

The use cases that will directly benefit your life are almost endless, natural language computing is a huge deal even without task based solvers and physical automation but we also have those too so the increased ability of people to make community projects and freely shared designs is huge.

It’s called nuclear energy. It was discovered in 1932 and properly harnessed with an effective reactor that consumes both radioactive material and waste (CANDU) in 1950’s/1960’s and the newest CANDU reactors are some of the safest and most efficient energy generation in the world.

Pretending like there needs to be a larger investment into something like cold fusion in order to run these computers is either incredibly dishonest or reveals a clear gap in education. (The DoE should still work on researching cold fusion, but not because of this.)

I love nuclear but China is building them as fast as they can and they’re still being massively outpaced by their own solar installations. If we hadn’t shut down most of the research and construction in the 80’s it would have been great, but it’s not going to be a solution to the huge power requirement growth from EVs and shit like AI in the “short” term of 1-20 years.

It’s important to keep in context who is building them, how they’re being built, and with what oversight they are built.

We are in no way perfect in the West, but we are easily a century ahead in ensuring build quality and regulatory oversight.

Solar alone can’t meet humanity’s energy needs without breakthroughs in energy storage.

Most energy we use the grid for is generated on demand. That means only a few moments ago, the electricity powering your computer was just a lump of coal in a furnace.

If we don’t have the means to store enough energy to meet demands when the sun isn’t out or wind isn’t blowing, then we need more sources of energy than just sun and wind.

There is a lot of misinformation being perpetuated by the solar industry to fool people like you into thinking all investments should be directed to it over other options.

Please educate yourself before parroting industry talking points that only exist to take people for a ride.

There is growing scientific consensus that 100% renewables is the most cost effective option.

Grid storage doesn’t have the same weight limitations that EVs do, which opens up a lot more paths. Flow batteries, for one, might be all we need. They’re already gearing those up for mass production, so we don’t need any further breakthroughs (though they’re always nice if they come).

Getting to 95% is surprisingly easy; there are non-linear factors at work to getting that last 5%, but you wouldn’t need to use other sources very much at all. The wind often blows when the sun doesn’t shine. We have tons of historical weather data about how these two combine in a given region, which means we can calculate the maximum expected lull between the two. Double that amount and put in enough storage to cover it. This basic plan was simulated in Australia, and it gets there for an affordable cost.
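The sizing rule described above (find the worst lull, double it, build storage to cover it) can be sketched roughly as follows. This is a simplified illustration under stated assumptions: hourly generation and demand series in the same energy unit, a “lull” taken to mean a contiguous run of hours where wind plus solar falls short of demand, and no recharging mid-lull, transmission losses, or round-trip efficiency. The function names and sample data are hypothetical, and this is not the method used in the Australian simulation.

```python
from typing import Sequence, Tuple


def worst_lull(generation: Sequence[float], demand: Sequence[float]) -> Tuple[int, float]:
    """Return (hours, energy shortfall) of the contiguous run of hours in
    which generation < demand that has the largest total shortfall."""
    if len(generation) != len(demand):
        raise ValueError("generation and demand must be the same length")
    best_len, best_deficit = 0, 0.0
    run_len, run_deficit = 0, 0.0
    for gen, dem in zip(generation, demand):
        if gen < dem:
            # Still in a lull: accumulate the shortfall for this hour.
            run_len += 1
            run_deficit += dem - gen
            if run_deficit > best_deficit:
                best_len, best_deficit = run_len, run_deficit
        else:
            # Generation covers demand: the lull (if any) is over.
            run_len, run_deficit = 0, 0.0
    return best_len, best_deficit


def storage_needed(generation: Sequence[float], demand: Sequence[float],
                   safety_factor: float = 2.0) -> float:
    """Storage capacity = safety_factor x the worst lull's shortfall
    (the 'double it' rule from the comment, applied to energy)."""
    _, deficit = worst_lull(generation, demand)
    return safety_factor * deficit


generation = [5, 1, 0, 6, 2, 2, 2]  # hypothetical hourly wind + solar output
demand = [3] * 7                    # hypothetical flat hourly demand
print(worst_lull(generation, demand))      # (2, 5.0)
print(storage_needed(generation, demand))  # 10.0
```

Note that the worst lull here is chosen by total shortfall, not by length: the three-hour dip at the end falls short by only 3 units, while the two-hour dip falls short by 5.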

Then we can worry about that last 5%.

Nuclear advocates have been using the same talking points since the 90s, and have missed how the economics have been swept out from underneath them.

Supplying energy isn’t only doing what’s “cost effective.” It’s about meeting demand.

This is why when suppliers have difficulty meeting demand, prices go up.

If we only did what was the cheapest instead of what was required to meet demand, then our demands wouldn’t be met and we would be without energy during those times.

Check the second link again. They were calculating how demand was met over time.

In Australia: a mostly open, sparsely populated, continent-sized island with vast amounts of sun, wind, and hydro, with people mostly gathered in a small band of the coast on one side (and even then it still needed 1/3 of total generating capacity backed by fossil fuels).

It’s great that Oz can maybe get away with almost entirely renewable (maybe; that simulation is essentially just multiplying current generation by a large number, adding some storage, and saying that mostly takes generation above demand; it doesn’t do any sort of analysis of when, where, or how that energy is generated or makes its way to the sources of demand), but it’s not a model for the rest of the world.

Yeah, nuclear has been available and in use over the period of the sharpest increase in co2 emissions. It’s not responsible for it, but it’s not the answer. The average person can’t harness nuclear energy. But all the renewable energies in the world can fit on a small house: wind, solar, hydro. Why bring radioactive materials into this?

We have a system to distribute electricity

But why continue to rely on a system of profit that is being run like a mob, being split into distinct territories where “free market capitalism” can’t even allow us to not get gouged by profit seekers? Why not generate our own power? Why not 100% renewables? Like I said, why bring radioactive materials into this? For that matter, why bring capitalism into it?

My comment was referring to when you mentioned the average person not being able to harvest nuclear energy as an argument against it.

I’m 100% for broad solar adoption and even laws forcing new homes to be built with it. The other renewables you mention aren’t harvestable by the average person either, sadly.

I think nuclear is an important tool for running clean societies. Industries need a lot of power, and I can also see mini reactors being bought by small towns for their citizens. It has its uses when the renewables aren’t feasible, but the best is always solar or wind farms and hydro, for sure.

Microsoft is actually looking at dedicated SMRs to run AI server farms, but could we fucking not?