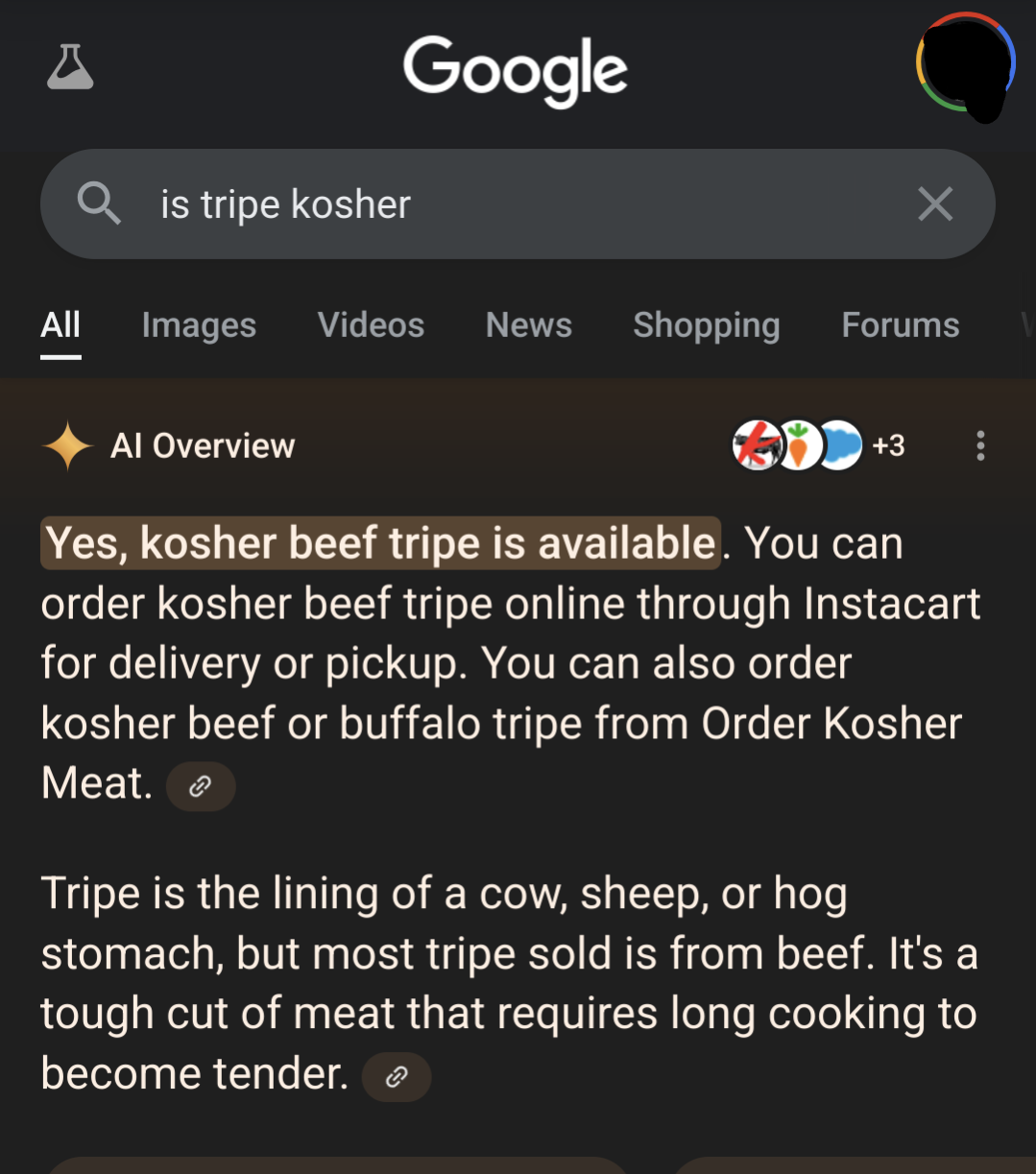

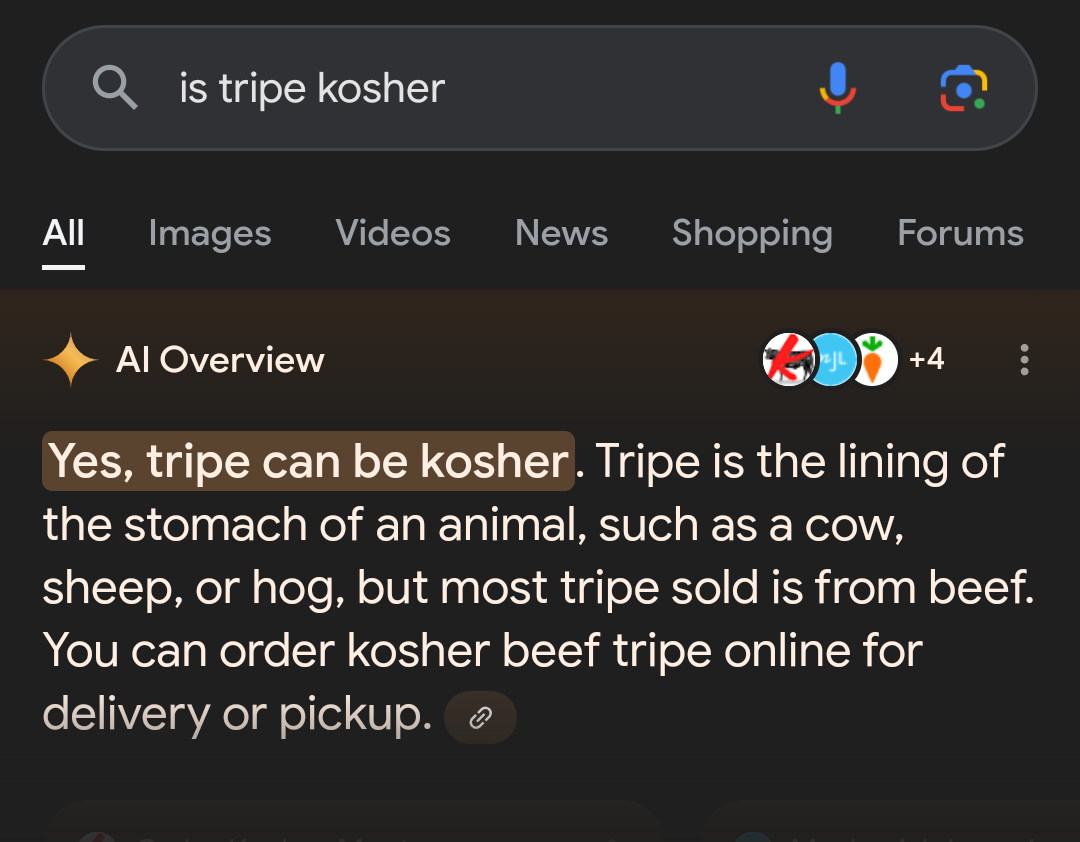

Strange. This is the result I get from Google.

It’s the same result the author of the image had before editing it with inspect element for internet points. You can see the source material at the bottom of the image, and it seems to match your result.

Looks like the sources are different: in the top right of the response you can see the favicons of the sites the AI used to get info. They are ~~apparently the same three pages, but in a different order~~ different pages, and the OP says +5 vs +3 in the other screenshot. So it looks like they might have dropped a couple of bad sources somewhere along the way. But that doesn’t help those who already got bad answers in the meantime…

Please explain how someone uses inspect element on iOS in what appears to be the default Safari app.

Mobile Safari supports remote debug/remote inspect element on a Mac.

Yeah I don’t think I’ve been able to reproduce a single one of these types of posts yet. I’ve gotten a radically different result several times though

Okay but what if the cow is a Scientologist?

what if it’s moo-slim? or cowtholic?

and what about other farmyard creatures? like the hen-do?

Tao Te Chick

A-goose-stic?

Mulemon (a mule that’s either Mormon or Rastafari, dealer’s choice)?

No respect for water religions, all Jewfish are very disappointed.

“LLM did something silly” must be the most boring type of concern bait out there.

No, it’s not “LLM did something silly”, it’s that Google / Alphabet has a defective product which millions of people rely on.

I would generally consider anyone who relies on AI to be an incompetent hack

Exactly. A young child could typically figure this out.

Yeah but who cares about people that are stupid enough to rely on shitty “AI” google products

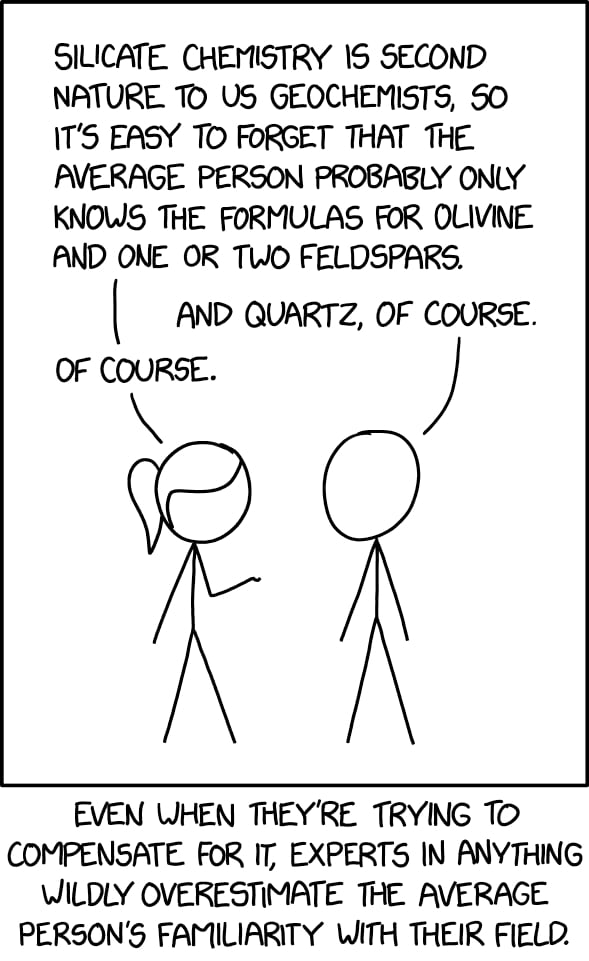

Kind of silly to call people stupid for not following the emergence of LLMs. My friend knows everything there is to know about HVAC, from best practices to the actual physics of it, and I had to tell him the pitfalls of relying on ChatGPT and the like for anything. His boss was raving about it and he’s not a computer nerd, so he thought it was a really advanced search engine. Not stupid, just not his forte.

I get your point, but I wouldn’t try to install an HVAC system or try to drive a car without a driver’s license. I think it’s fair to expect some degree of critical thinking from adults. I don’t expect non-tech people to intuitively understand it, but if you spend 5 minutes reading the Wikipedia page about ChatGPT, you would realise it’s not “just” a search engine.

But yeah, I know that at the end of the day, the average person has simply been lulled into believing that modern tech is magic and can just be trusted for no reason other than it being popular. So it’s not really their fault, but they are still responsible for their own actions. Trying to shift blame to Google, as much as I hate them, is just absurd.

The equivalent of reading the top answer on Google isn’t installing an HVAC system. It’s turning on the AC. I bet most people who do that don’t know much about how they work. If your landlord got the AC replaced with something “new and better” but it still blew cold when you turned it on, I doubt most people do anything different than what they were already doing unless they experienced bad results.

> I get your point, but I wouldn’t try to install an HVAC system or try to drive a car without a driver’s license.

You would if you knew nothing about them, and the manufacturing company insists that you can safely and easily use the product with no training.

No. No it is NOT absurd.

If someone, especially an ostensibly reputable company, is selling poison, they should be on the hook for selling poison. Period. End of discussion.

“butbut people should see others dying and not drink the poison!”

No. No, it IS NOT the customer’s fault for being duped by false advertisement. Fuck, you want to live in a hellscape where evil is shrugged off as, “should’ve known better.”

Fuck that, and fuck anyone naive enough to defend such a juvenile, ignorant position.

They are not offering poison tho. They are offering a product that does exactly what it’s supposed to do. There is no evil here, there is no wrongdoing. It’s just a bad, incomplete system.

Also, I haven’t heard of any promise or advertisement of these things being all-knowing, perfect information databases. That’s just what people tend to think because it is beyond their comprehension.

The real hellscape would be a world where you can’t create anything new because someone might abuse your invention somehow.

Then don’t look up I guess.

The problem at its broadest is that if companies put out shitty products and the line still goes up, then the line going up does not mean the economy is efficiently producing what we need. That means the system is obviously broken. Maybe the system never worked in the first place.

The line going up has never meant that a company is working in the public’s favour. The system has always been broken; the only way to win is to avoid relying on big companies and their products as much as you possibly can.

Anyone that unironically relies on LLM outputs to guide their decisions is responsible for the outcome themselves.

People have gotten so mad at me for saying this. Seems like they’re finally getting it

“Man shows that LLMs don’t work the way too many people expect them to.”

Called that one. Hey, this technology is like the game of telephone we used to play as children, who wants to invest billions???