- cross-posted to:

- hackernews@derp.foo

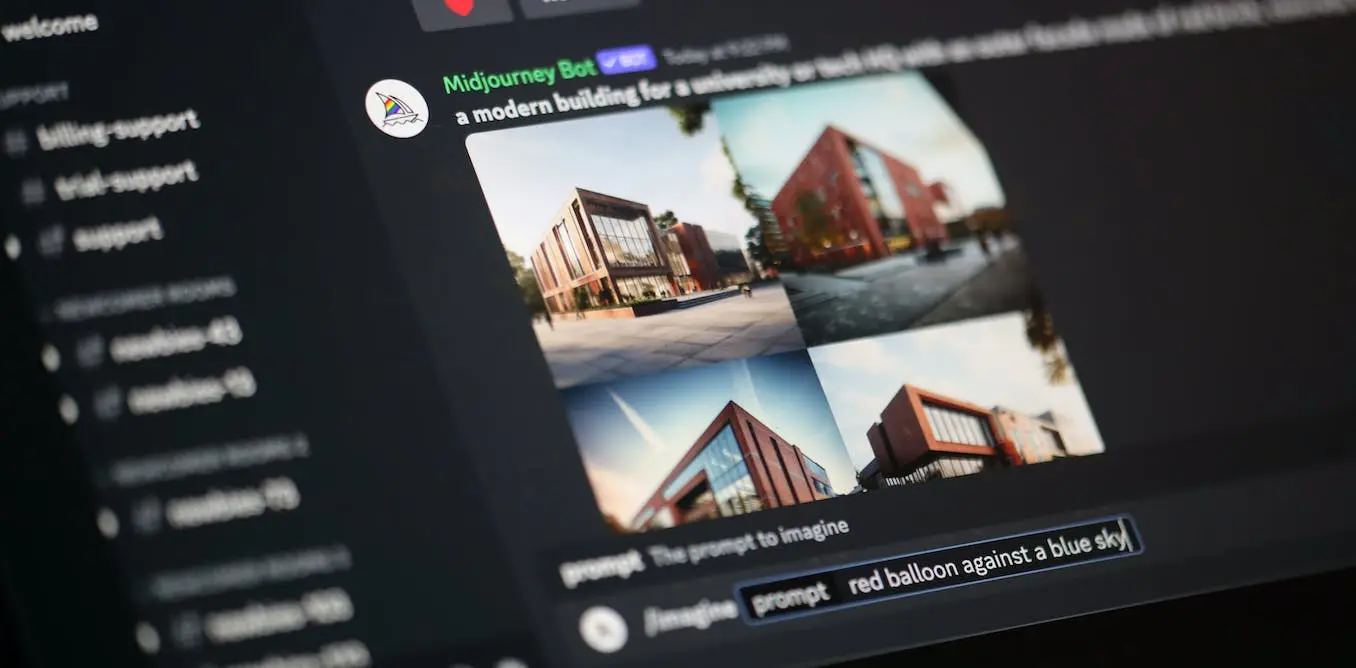

Data poisoning: how artists are sabotaging AI to take revenge on image generators

As AI developers indiscriminately suck up online content to train their models, artists are seeking ways to fight back.

Let’s see how long it takes before someone figures out how to poison the data so that it returns NSFW images.

You can already create NSFW AI images, though?

Or did you mean that when poisoned data is used, an NSFW image is created instead of the expected image?

Definitely the latter!