I’m pretty new to selfhosting and homelabs, and I would appreciate a simple-worded explanation here. Details are always welcome!

So, I have a home network with a dynamic external IP address. I already have my Synology NAS exposed to the Internet with DDNS - this was done using the interface, so didn’t require much technical knowledge.

Now, I would like to add another server (currently testing with Raspberry Pi) in the same LAN that would also be externally reachable, either through a subdomain (preferable), or through specific ports. How do I go about it?

P.S. Apparently, what I’ve tried on the router does work, it’s just that my NAS was sitting in the DMZ. Now it works!

I really feel like people who are beginners shouldn’t play with exposing their services. When you set up Caddy or some other reverse proxy and actually monitor it with something like fail2ban, you can see that the crawlers etc. are pretty fast to find your services. If any user has a very poor password (or is reusing a leaked one), then someone has pretty open access to their stuff and you won’t even notice unless you’re logging stuff.

Of course you can set up 2FA etc but that’s pretty involved compared to a simple wg tunnel that lives on your router.

For now I’m only toying around, experimenting a little - and then closing ports and turning my Pi off. I do have my NAS constantly exposed, but it is solidly hardened (firewall, no SSH, IP bans for unauthorized actions, etc. etc.), fully updated, hosts no sensitive data, and all that is important is backed up on an offline drive.

My mantra is “plan to be hacked”, whether that means a good backup strategy, a read-only VM, good monitoring or serious firewall rules.

What are you running?

If it is http based use a reverse proxy like Caddy

Update: tried Caddy, love it, dead simple, super fast, and absolutely works!

For now just some experiments alongside NAS

Planning to host Bitwarden, Wallabag and other niceties on the server, and then when I get something more powerful, spin up Minecraft server and stuff

The synology NAS can act as a reverse proxy for stuff inside your network. I don’t have mine in front of me, so you will have to google the steps, but basically you point the synology to an internal resource and tell it what external subdomain it should respond to.

Yes, I know where this feature is in the settings, but it’s got its own issues and I also turn the NAS off for the night, so it’s not an option for me.

Why do you turn off the NAS at night? Reminds me of my grandparents turning off the wifi at night.

Drives are somewhat noisy (even though I took fairly quiet ones) and I appreciate total silence at night. Unfortunately, I don’t have many places to put it outside my single room, so there’s that.

I’d love to move to SSDs for storage at some point (I know it’s controversial, but they would fit my use case better), but for now it’s too expensive for me.

Ahh, that’s valid. I’ve been wanting to build a (relatively) small 16TB SSD NAS for video editing, after which I could dump footage to my main NAS. SSD NAS systems can definitely make sense depending on your use case. Hell, you can even game off of them if you’ve got 10gig networking.

I’d love to eventually have a 10gbps LAN, yep :)

I’d also love to explore the technology going into cloud gaming, so not only would I launch games using files lying on the server, but I could actually play them anywhere from my energy-efficient potato laptop :D

But that’s long ahead and more of an “if it even works properly”

Then it’s not selfhosting.

In what way? It is a physical server located in my bedroom, sharing resources online.

Dude above you is under the impression that it requires 100% uptime or other users to be classified as self-hosting, which is wrong. You are definitely self-hosting, albeit only for yourself, I assume. Which is fine.

Yes, I meant the uptime. To me, hosted means I can reach it digitally at any time, even if it is just via Wake-on-LAN. But if you guys say some device running 8 hrs a day is hosting, I am fine with that.

Yep, sharing stuff with others requires more expertise, as I’d become responsible for other people’s experience. If I screw something up now, only I will be affected.

And you are self-sufficient, or whatever the word is. But that’s the key thing for me, not having to rely on others for my services :)

Yep!

For me it’s a sense of reliability and control - my stack will keep working even if new censorship rolls out (I live in a heavily censored and sanctioned jurisdiction), or if there’s a global outage, or whatever else. I am also the sole authority over my piece of the Internet, and no one can do anything to alter it or take it away.

Good to hear you figured it out with router settings. I’m also new to this but got all that figured out this week. As other commenters say, I went with a reverse proxy and configured it. I chose Caddy over nginx for ease of install and config. I documented just about every step of the process. I’m a little scared to share my website on public forums just yet, but PM me and I’ll send you a link if you want to see my infrastructure page where I share the steps and config files.

Thanks, I will! Wise of you not to share it publicly for security reasons

Welcome to the wonderful world of reverse proxies!

You already have a lot of good answers … but I got one more to add.

I have a very similar setup on my homelab and I’m using a Cloudflare tunnel.

It’s a free service and it’s really good because it allows you to expose web services and specific ports for remote access over dynamic IPs without having to expose your own router.

https://developers.cloudflare.com/cloudflare-one/connections/connect-networks/

Thanks! I got that advice as well, but I would like to keep it self-hosted - I consider using Pangolin on a VPS for that purpose going forward: https://github.com/fosrl/pangolin

Also, beware of the new attack on Cloudflare Tunnel: https://www.csoonline.com/article/4009636/phishing-campaign-abuses-cloudflare-tunnels-to-sneak-malware-past-firewalls.html

This attack targets end users, not Cloudflare tunnel operators (i.e. self-hosters). It abuses Cloudflare Tunnels as a delivery mechanism for malware payloads, not as a method to compromise or attack people who are self-hosting their own services through Cloudflare Tunnels.

Thanks for clarification!

Whispers “try proxmox”

I will eventually!

But as far as I understand, it is for putting many services on one machine, and I already have a NAS that is not going anywhere

I’ve gone the other way. I used to run a Proxmox cluster, then someone gave me a Synology NAS. Now it’s rare that I spin up Proxmox and instead use a mix of VMs, containers and Synology/Synocommunity apps.

Interesting!

But I don’t want to mix it too much. I do have a Docker on it with just some essentials, but overall I’d like to keep NAS a storage unit and give the rest to a different server.

I treat the NAS as an essential service and the other server as a place to play around without the pressure of screwing anything up

You need a reverse proxy. That’s a piece of software that takes incoming requests and passes them on to the correct endpoint.

You need to create port forwards in the router and direct 80 and 443 (or whatever you’re using) toward the host that runs the reverse proxy, which listens on those ports. If it recognizes that the requests are for nas.your.domain, it will forward the requests to the NAS.

Common reverse proxies are nginx or Caddy. You can install it on your Raspberry Pi; it doesn’t need its own device.

If you don’t want that, you can create different port forwards on your router (e.g. 8080 and 8443 to the Raspi) and configure your service on the Raspi accordingly. But it doesn’t scale well, you’d need to include the port in every URL, and the reverse proxy is the usual solution.
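To make the hostname part concrete, here is a minimal Python sketch of the decision a reverse proxy makes for each request. It is only an illustration, not how nginx or Caddy are implemented. Apart from nas.your.domain, the hostnames, IP addresses and ports are made-up placeholders.

```python
# Hypothetical routing table: hostname -> internal "ip:port".
# Every address and port below is a placeholder for illustration.
ROUTES = {
    "nas.your.domain": "192.168.0.100:5001",
    "pi.your.domain": "192.168.0.113:8080",
}


def pick_backend(host_header):
    """Return the internal backend for a request's Host header, or None."""
    # Clients may send "name:port"; drop the port and normalise the case.
    # (This simple split does not handle IPv6 literals like "[::1]:443".)
    hostname = host_header.split(":")[0].strip().lower()
    return ROUTES.get(hostname)


print(pick_backend("nas.your.domain"))      # 192.168.0.100:5001
print(pick_backend("PI.your.domain:443"))   # 192.168.0.113:8080
print(pick_backend("unknown.your.domain"))  # None
```

The router only ever sees ports 80 and 443; the choice between NAS and Pi happens inside the proxy, based on the name the browser asked for.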

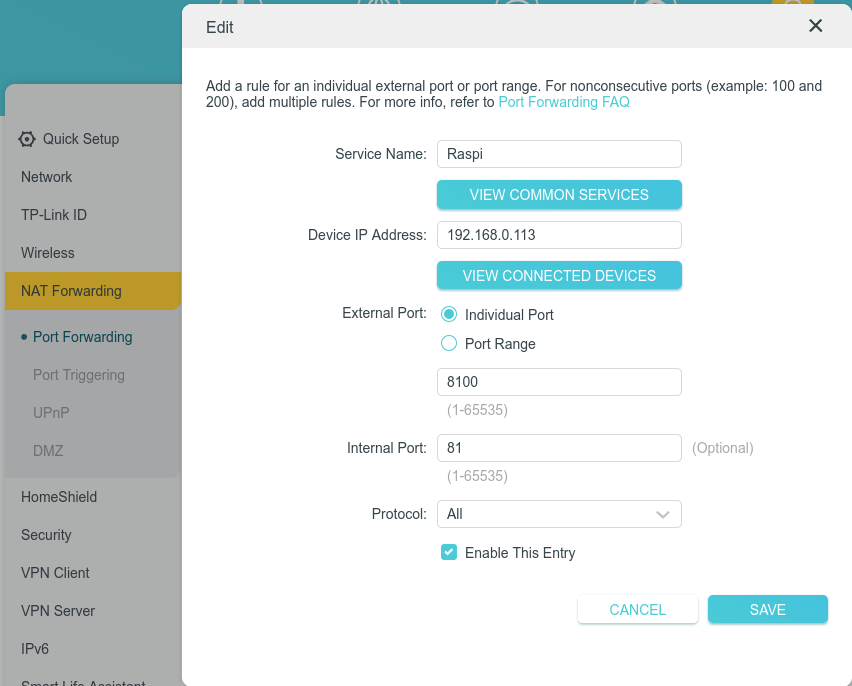

There’s an issue with that first part. Did I configure it right? Should <domain>:8100 be redirected to 192.168.0.113:81 in this case?

External 80 to internal 80 and external 443 to internal 443

With this config you don’t have to deal with ports later, as http is 80, https is 443 by default.

If you run some container on port 81, you have to deal with that in the reverse proxy, not in the router. E.g. forward something.domain.tld to 192.168.0.103:81
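A small Python sketch of why forwarding 80 and 443 means nobody has to type a port: when a URL has no explicit port, the client falls back to the default for the scheme. The hostname is just an example, and `effective_port` is a name invented for this sketch.

```python
from urllib.parse import urlsplit

# Well-known default ports for the two web schemes.
DEFAULT_PORTS = {"http": 80, "https": 443}


def effective_port(url):
    """Port a client connects to: the explicit one, else the scheme default."""
    parts = urlsplit(url)
    return parts.port or DEFAULT_PORTS[parts.scheme]


print(effective_port("https://something.domain.tld"))      # 443
print(effective_port("http://something.domain.tld"))       # 80
print(effective_port("http://something.domain.tld:8100"))  # 8100
```

Only the last form needs its own port forward on the router, which is what the reverse proxy lets you avoid.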

If you use Docker, check out nginxproxymanager; it has a very beginner-friendly admin web UI. You shouldn’t forward the admin UI’s port; you only need to access it from your LAN.

Actually, I do - 81 is exactly the default port for nginx proxy manager. I just tried to expose it as a testing example, and already closed it back after a success (apparently port forwarding worked just fine, it’s just that DMZ messed with it)

And since we’re talking about this, what do I do with it next? I have it on my Pi, how do I ensure traffic is distributed through it as a reverse proxy? Do I need to expose ports 80 and 443 and then it would work automagically all by itself?

You enter the IP of the RPi in the router’s port forwarding rules, so the router forwards external requests to the RPi. Otherwise, I’m not sure what your question is.

Things may seem automagical in the networking scene, but you can config anything the way you want. Even in nginxproxymanager you can edit the underlying actual nginx configs with their full power. The automagic is just the default setting.

Where do I type rpi’s IP, just in port forwarding? Or somewhere else?

I want for Nginx proxy, controlled through the Manager, to direct traffic to different physical servers based on subdomain.

I put in nas.my.domain and I get my Synology on its DSM port. I put in pi.my.domain and I get a service on my Pi.

It seems you are missing some very basic knowledge, if you have questions like this. Watch/read some tutorials to get the basics, then ask specific questions.

This guy does the same thing as you: https://www.youtube.com/watch?v=yLduQiQXorc

This was like the 3rd result for searching for nginxproxymanager on yt.

Guess I am getting ahead of myself, yes, which gets even more complicated by having another server (Synology NAS) already installed and messing with networking a little, as internal settings appear to expect the NAS to be the only exposed thing on the network.

Thanks for the link! I’ve seen that thumbnail, but most guides are solely focused on actually installing Nginx Proxy Manager, which is the easy part, and skip the rest, so I skipped over that one.

P.S. Looks like I did everything right, I just need to sort my SSL stuff to work properly.

VPN is definitely the way to go for home networks. Your router even has one built in. OpenVPN and Wireguard are good.

If you really want to expose stuff like this, the proper way is to isolate your home network from your internet-exposed network using a VLAN. Then use a reverse proxy, like Caddy, and place everything behind it.

Another benefit of a reverse proxy is you don’t need to set up HTTPS certs on everything, just on the proxy.

You do need a business or prosumer router for this though. Something like Firewalla, or setting up OpenWRT or OPNsense.

Synology also has their QuickConnect service. While not great, if you keep UPnP off and ensure your firewall and login rate limiting are turned on, it may be better than just directly exposing stuff. But it’s had its fair share of problems, so yeah.

Consider not self-hosting everything. For example, if all your family cares about is private photo storage, consider using an open source E2EE service for photos in the cloud, like Ente Photos. Then you can use a VPN for the rest. https://www.privacyguides.org/ has some recommendations for privacy-friendly stuff.

Also consider the fallout that would happen if you are hacked. If all your photos and other things get leaked because your setup was not secure was it really any better than using big tech?

If nothing else, please tell me you are using properly set up HTTPS certs from Let’s Encrypt or another good CA, using a firewall, and have login rate limiting set up on everything that is exposed. You can also test your SSL setup using something like https://www.ssllabs.com/ssltest/

No truly private photos ever enter the NAS, so on that front it should be fine.

VPN is not an option for several reasons, unfortunately.

But I do have a Let’s Encrypt certificate, firewall and I ban IP after 5 unsuccessful login attempts. I also have SSH disabled completely.
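For anyone wondering what a “ban after 5 failed logins” rule boils down to, here is a rough Python sketch. It is not how Synology implements it; the class name and the reset-on-success behaviour are assumptions made for the example, and the IPs are from documentation ranges.

```python
from collections import Counter

MAX_FAILURES = 5  # threshold taken from the rule described above


class LoginGuard:
    """Count failed logins per IP and ban once the threshold is reached."""

    def __init__(self, max_failures=MAX_FAILURES):
        self.max_failures = max_failures
        self.failures = Counter()
        self.banned = set()

    def record_failure(self, ip):
        self.failures[ip] += 1
        if self.failures[ip] >= self.max_failures:
            self.banned.add(ip)

    def record_success(self, ip):
        # Resetting the counter on success is a design choice of this sketch.
        self.failures.pop(ip, None)

    def is_banned(self, ip):
        return ip in self.banned


guard = LoginGuard()
for _ in range(5):
    guard.record_failure("203.0.113.7")
print(guard.is_banned("203.0.113.7"))   # True
print(guard.is_banned("198.51.100.2"))  # False
```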

SSL Test gave me a rating of A

Who is externally reaching these servers?

Joe public? Or just you and people you trust? If it’s Joe public, I wouldn’t have the entry point on my home network (I might VPS tunnel, or just VPS host it).

If it’s just me and people I trust, I would use VPN for access, as opposed to exposing all these services publicly

Just me and the people I trust, but there are certain inconveniences around using VPN for access.

First, I live in the jurisdiction that is heavily restrictive, so VPN is commonly in use to bypass censorship

Second, I sometimes access my data from computers I trust but can’t install VPN clients on

Third, I share my NAS resources with my family, and getting my mom to use a VPN every time she syncs her photos is near impossible

So, fully recognizing the risks, I feel like I have to expose a lot of my services.

Remember that with services facing the public internet it’s not about if you get hacked but when you get hacked. Then it’s your personal photos in someone else’s hands.

Not sure why you’re downvoted, you’re absolutely right. People scan for open ports all day long and will eventually find your shit and try to break in. In my work environment, I see thousands of login attempts daily on brand new accounts, just because something discovered they exist and wants to check them out.

Those who have not been burned yet often don’t expect it to happen to them. Usually it isn’t anything big causing it but some typo in a config or software not updated on time.

I do remember that and take quite a few precautions. Also, nothing that can be seriously used against me is in there.

I have wrestled with the same thing as you and I think nginx reverse proxy and subdomains are a reasonably good solution:

- nothing answers from www.mydomain.com or mydomain.com or ip:port.

- I have subdomains like service.mydomain.com and letsencrypt gives them certs.

- some services even use a dir, so only service.mydomain.com/something will get you there but nothing else.

- keep the services updated and using good passwords & non-default usernames.

- Planned: instant IP ban to anything that touches port 80/443 without using a proper subdomain (whitelisting letsencrypt ofc), same with the ssh port and other commonly scanned ones. Using fail2ban reading nginx logs, for example.

- Planned: geofencing some ip ranges, auto-updating from public botnet lists.

- Planned: wildcard TLS cert (*.mydomain.com) so that the subdomains are not listed anywhere, maybe even a Cloudflare tunnel with this.

The only fault I’ve discovered is the public ledgers of TLS certs (Certificate Transparency logs), where the certs issued by letsencrypt spill those semi-secret subdomains out to the world. I seem to get very few to no bots knocking on my services though, so maybe those are not being scraped that much.
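On the wildcard idea: a wildcard cert only shows up as *.mydomain.com in those public ledgers, while still covering every direct subdomain. A simplified Python sketch of which names one wildcard covers (real TLS libraries apply more rules than this; `wildcard_covers` is a name invented for the example):

```python
def wildcard_covers(cert_name, hostname):
    """True if cert_name (possibly "*.example.com") covers hostname.

    Simplified rule: "*" stands for exactly one left-most label.
    """
    cert_labels = cert_name.lower().split(".")
    host_labels = hostname.lower().split(".")
    if len(cert_labels) != len(host_labels):
        return False
    if cert_labels[0] == "*":
        # Everything after the wildcard label must match exactly.
        return cert_labels[1:] == host_labels[1:]
    return cert_labels == host_labels


print(wildcard_covers("*.mydomain.com", "service.mydomain.com"))  # True
print(wildcard_covers("*.mydomain.com", "mydomain.com"))          # False
print(wildcard_covers("*.mydomain.com", "a.b.mydomain.com"))      # False
```

So the bare domain and deeper levels like a.b.mydomain.com would still need their own certs.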

Pretty solid! Though insta-ban on everything :80/443 may backfire - too easy to just enter the domain name without subdomain by accident.

Could be indeed. Looking at the nginx logs, setting a permaban on trying to access /git and a couple of others might catch 99% of bots too. And ssh port ban trigger (using knockd for example) is also pretty powerful yet safe.
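The bait-path idea could look roughly like this in Python. This is only a sketch of the matching logic, not a fail2ban filter: it assumes nginx’s default “combined” log format, and apart from /git the bait paths are hypothetical examples.

```python
import re

# Paths no legitimate visitor should request. "/git" is from the comment
# above; the other two are hypothetical examples.
BAIT_PATHS = ("/git", "/.env", "/wp-login.php")

# Start of nginx's default "combined" log format:
# client IP, identity, user, [timestamp], "METHOD path protocol"
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "(?:\S+) (\S+)')


def ips_to_ban(log_lines):
    """Return the set of client IPs that requested any bait path."""
    offenders = set()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that do not look like access-log entries
        ip, path = match.groups()
        # Crude prefix match: "/git" also catches "/git/config".
        if path.startswith(BAIT_PATHS):
            offenders.add(ip)
    return offenders


sample = [
    '203.0.113.7 - - [01/Jan/2025:00:00:01 +0000] "GET /git/config HTTP/1.1" 404 153 "-" "bot"',
    '198.51.100.2 - - [01/Jan/2025:00:00:02 +0000] "GET /index.html HTTP/1.1" 200 612 "-" "Mozilla/5.0"',
]
print(ips_to_ban(sample))  # {'203.0.113.7'}
```

Because it matches by prefix, a real page whose path starts with /git would also trigger it, so the bait list needs some care.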

Your stuff is more likely to get scanned sitting in a VPS with no firewall than behind a firewall on a home network

Why wouldn’t you setup a firewall on the VPS?

Unless your home internet is CG-NAT, both have a publicly accessible IP address, so both will be scanned
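A quick way to tell whether you are behind CG-NAT is to look at the WAN address your router reports. A minimal Python sketch, assuming you copy that IPv4 address in by hand (the function name and sample addresses are only examples):

```python
import ipaddress

# Shared address space reserved for carrier-grade NAT (RFC 6598).
CGNAT_RANGE = ipaddress.ip_network("100.64.0.0/10")


def classify_wan_ip(address):
    """Roughly classify the IPv4 address shown on the router's WAN side."""
    ip = ipaddress.ip_address(address)
    if ip in CGNAT_RANGE:
        return "cgnat"
    if ip.is_private:
        return "private"  # e.g. the router sits behind another router
    return "public"


print(classify_wan_ip("100.72.10.5"))   # cgnat
print(classify_wan_ip("192.168.1.10"))  # private
print(classify_wan_ip("8.8.8.8"))       # public
```

Only the “public” case is directly reachable (and scannable) from the Internet; in the other two, port forwarding on your own router is not enough.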

If you mean HTTP server, what you need is a reverse proxy and name-based virtual hosts. I usually use nginx for such tasks, but you may choose another web server that has these features.

Thanks!

I recommend caddy as a webserver, it’s very powerful, but the config is super simple compared to old school stuff like nginx or apache.

Heard quite a few positive reviews on that one, thanks!

You can either:

A) Use a different port, just set up the new service to run on a port that’s not used by the other service.

B) If it’s an HTTP(S) service, use a reverse proxy and a subdomain.

The router gets the public IP. Log in to it and find the port forwarding option. You’ll pick a public port, e.g. 443, and forward it to a local IP:port combo, e.g. 192.168.0.101:443.

Then you can pick another public port and forward it to a different private IP:port combo.

If you want a subdomain, you forward one port to one host and have it do the work, e.g. configure Nginx to do whatever you want.
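As a mental model, the router’s port forwarding is just a lookup table keyed by public port. A tiny Python sketch; the 443 rule is the example from above, the second rule is made up:

```python
# Hypothetical port-forwarding table: public port -> (local IP, local port).
# 443 -> 192.168.0.101:443 is the example from the text; 8443 is invented.
PORT_FORWARDS = {
    443: ("192.168.0.101", 443),
    8443: ("192.168.0.113", 443),
}


def forward(public_port):
    """Return where the router sends traffic arriving on public_port."""
    # No matching rule: the router has nowhere to send the connection.
    return PORT_FORWARDS.get(public_port)


print(forward(443))   # ('192.168.0.101', 443)
print(forward(8443))  # ('192.168.0.113', 443)
print(forward(22))    # None
```

Note that the table only knows about ports, not hostnames, which is why the subdomain case needs something like Nginx behind a single forwarded port.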

EDIT: or you use IPv6. Everything is a public IP.

Thanks!

Looks like you got it! Congrats.

Yup

If you go with IPv6, all your devices/servers have their own IP. These IPs are valid in your LAN as well as externally.

But it’s still important to use a reverse proxy (e.g. for TLS).

Oh, nice! So I don’t have just one, but many external IPs, one for every local device?

Yes, even IPv4 was intended to give each device in the world their own IP, but the address space is too limited. IPv6 fixes that.

Actually, each device usually has multiple IPv6s, and only some/one are globally routable, i.e. it works outside of your home network. Finding out which one is global is a bit annoying sometimes, but it can be done. Usually routers still block incoming traffic for security reasons, so you still have to open ports in your router.
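One way to pick out the global address is Python’s standard `ipaddress` module. A minimal sketch, assuming you paste in the addresses your device reports; the three sample addresses are only illustrations (link-local, unique local, and a well-known public one):

```python
import ipaddress


def global_addresses(addresses):
    """Keep only the IPv6 addresses that are globally routable."""
    result = []
    for text in addresses:
        ip = ipaddress.ip_address(text)
        if ip.version == 6 and ip.is_global:
            result.append(text)
    return result


# fe80::/10 is link-local, fc00::/7 is LAN-only (unique local).
candidates = ["fe80::1", "fd12:3456:789a::1", "2606:4700:4700::1111"]
print(global_addresses(candidates))  # ['2606:4700:4700::1111']
```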

Nice to know!

NAT; I use my OpenWRT router for that

OpenWRT also has great IPv6 support