As they correctly point out, humanity has a long history of “offloading” cognitive tasks to new technologies as they emerge, and people have always worried that these technologies will destroy human intelligence.

There’s a significant difference here, though: we are attempting to automate the cognitive process we call “thinking.”

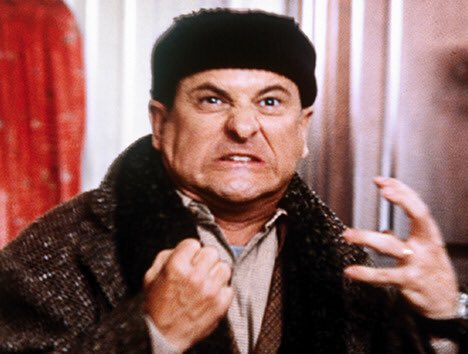

I continue to never fuck with any of this shit, based on an intuitive understanding that it is of evil, which I cannot fully explain but which has paid off as a judgement.

The same problem exists in the self-driving space.

Level 2 (partial automation) relies on ADAS, or Advanced Driver Assistance Systems. Some Level 2 features actually harm driver attention, such as:

- Adaptive cruise control

- Collision avoidance systems

- Lane centering

- Lane change assistance

> Generative AI tools […] are the latest in a long line of technologies that raise questions about their impact on the quality of human thought, a line that includes writing (objected to by Socrates), printing (objected to by Trithemius), calculators (objected to by teachers of arithmetic)

Not this horseshit again. I genuinely feel like I’m losing my mind. How do people so flippantly ignore the context in which these technologies were introduced?

It would be leagues different if we were talking about a tool, but I think a 500-billion-dollar investment constitutes more of a weapon 🤔

I fucked around with ChatGPT a couple months ago just to see WTF, and frankly I can’t find a use for it. I have no questions that need AI to answer. I have no routines that I need AI to plan, meal programs to figure out, code to write, etc. I’m not sure if it’s because I lack the requisite intelligence to have questions that cannot be conventionally answered, or because I just dgaf.

Technology should be used to enhance a person’s abilities and better their life, not to destroy us.

That said, what’s the opinion on using them to write cover letters for bullshit job applications?